How to Trust Again

Deepfakes, Misinformation, and a Possible Way Out

Reality is easier than ever to fake—politicians 1, rappers 2, even people and places that don’t exist 3. It’s spooky, sometimes kooky, but also dangerous—and getting more so by the month. In a textbook example of Brandolini’s law 4, synthetic media is significantly cheaper to produce than to debunk, creating an uphill arms race of tools used to synthesize realistic media and methods to detect them 5.

The broader issue, however, is much older than deepfakes—it’s misinformation. The next time you watch an online video, perhaps one depicting current events, ask yourself the following questions:

- When was it filmed?

- Where was it filmed?

- Was it cut, modified, or altered in any way?

- Was it produced by a physical camera? What camera?

It’s a painful realization: leaving any of these questions unanswered opens up a potential avenue for misinformation. And how can we tell? Shall we look for artifacts left by digital manipulation? Scrutinize the minutiae in the background of a video, looking for a species of grass that shouldn’t be there 6? It doesn’t have to be this way. At least, it shouldn’t have to be this hard.

For certain use cases, we could use a change of perspective. Instead of detecting deception directly, we could focus on verifying authenticity by giving devices the tools they need to produce trustworthy media. Implemented correctly, such a system would be one of the most powerful tools we have in the fight against deliberate misinformation. Here’s one way we could do it.

Before We Begin…

A necessary consequence of the methods described below is the creation of immutable public records. Therefore, special care must be taken to consider and protect the privacy of all involved parties. This system has an unambiguous and ethical use case for canonically public content like news broadcasts and addresses delivered by government officials. However, the ethics can get murky if you stray too far from these applications.

In short, it might not be the best idea for this technology to be accessible from your pocket. It’d be nice to confirm found footage of Bigfoot, but Bigfoot deserves privacy too.

What: Origin Verification

Raw digital video footage, audio recordings and photographs are, at their core, untouched approximations of reality: measurements. Unfortunately, the term “real” 7 8 9 risks mistaking a measurement for the reality it attempts to approximate. Instead, we’ll borrow from the field of digital forensics and create a more palatable (if no less wordy) term: sterile non-synthetic media.

You’re likely familiar with synthetic media 10. In short, synthetic media is anything that isn’t a pure, unadulterated measurement. This includes unambiguously synthetic media like animated squirrels, synthesized voices, and deepfakes, but also more subtle examples like cropped photographs, audio with lossy compression, or even a video with an overlaid logo.

Sterile media 11, as the name may suggest, is untouched, delicate, and perhaps unwieldy: a single flipped bit may invalidate its trustworthiness (and we all know how easily flipped some bits are). Ironically, it’s confidence in the sterility of non-synthetic media that will form the bedrock of trust in a media-verifiable world.

If this much can be accepted, then there exists only one way forward: verifying the origin of our measurements requires a shift in the way we manufacture the instruments that record them. What this means in practice is an Internet of… ah. This already exists, doesn’t it?

Yes, video cameras are IoT devices now (or is it the other way around?), and they have been for a while. With the help of trusted platform modules (TPMs) 12, public-private key pairs can be securely generated on-device and certified, yielding certificates that bind each public key to the identity of the device in question, not unlike IoT devices in public key infrastructures 13.

The formula to create origin-verifiable media, then, is to sign it. Like secure web browsing, this necessitates the existence of trusted third parties, a role that could be filled by existing certificate authorities like IdenTrust, DigiCert and Let’s Encrypt 14.
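To make “sign it” concrete, here is a minimal Python sketch of hash-then-sign using textbook RSA. Everything about the key is an assumption for illustration only: the primes are two well-known Mersenne primes, so the private key is effectively public and the scheme is insecure. A real camera would generate its key inside a TPM and use a vetted signature scheme, and the certificate that binds the public key (N, E) to the device’s identity is not modeled here. The names `digest`, `sign`, and `verify` are hypothetical.

```python
import hashlib

# Toy, deliberately INSECURE key material: two well-known Mersenne primes.
# A real device would keep its private key inside a TPM and use a vetted scheme.
P = 2**89 - 1
Q = 2**127 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (modular inverse needs Python 3.8+)


def digest(media: bytes) -> int:
    """Hash the media with SHA-256 and reduce it into Z_N (textbook hash-then-sign)."""
    return int.from_bytes(hashlib.sha256(media).digest(), "big") % N


def sign(media: bytes) -> int:
    """Device side: signature = H(media)^D mod N."""
    return pow(digest(media), D, N)


def verify(media: bytes, signature: int) -> bool:
    """Anyone holding the device's public key (N, E), e.g. from its certificate."""
    return pow(signature, E, N) == digest(media)
```

Because the digest covers every byte, changing any part of the media makes `verify` fail even when the signature itself is left untouched.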

When: Temporal Verification

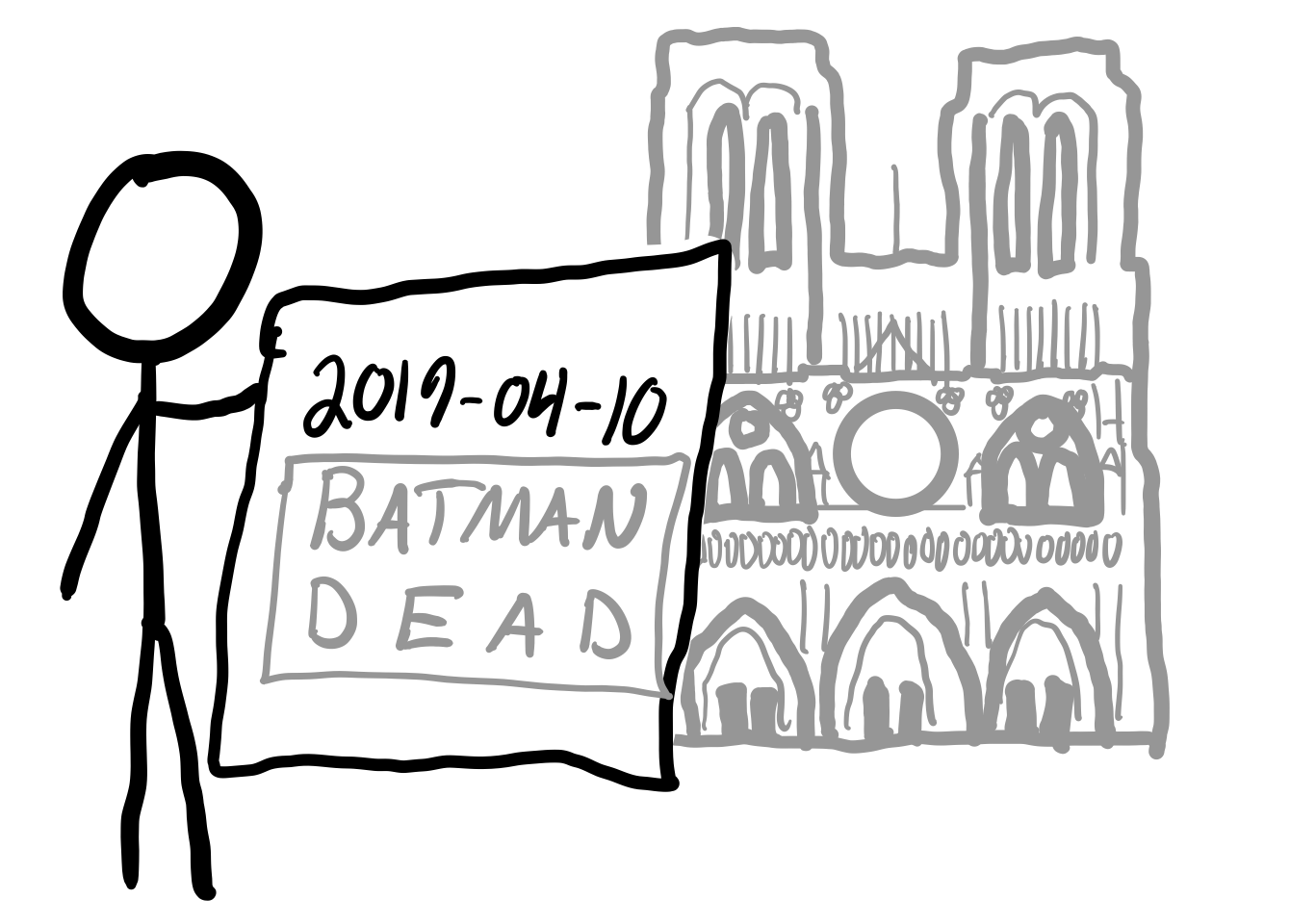

Consider the following hypothetical video recovered from a dead video camera:

A man stands next to the famous cathedral of Notre-Dame in Paris. He holds the front page of a newspaper to the camera. Dated April 10, 2019, this headline isn’t the one we needed; the superhero known as Batman has died.

Without the aid of digital metadata, can you deduce when this video must have been filmed?

Our deduction won’t be as precise as a digital timestamp, but we can combine simple clues with well-known, uncontroversial information to deduce a range of time during which this video must have been filmed. The easiest place to start is the newspaper: printed shortly after Batman’s death, the newspaper tells us that the video must have been filmed on or after April 10, 2019. Undoubtedly useful, this information alone still leaves a large (and growing) range of ambiguity: we have no upper bound better than today.

The missing piece of the puzzle comes from the cathedral of Notre-Dame in the background; unbeknownst to the world at the time of Batman’s death, the beloved Parisian cathedral would be ravaged by fire just five days later, losing its roof and iconic spire. Many mourned the damage to an important cultural landmark, but we can be thankful for the flames: because the cathedral appears intact in our video, it must have been filmed between April 10 and April 15, 2019. Importantly, we intuitively trust the dates we’ve deduced, perhaps even more than the video’s accompanying digital metadata. Bien!

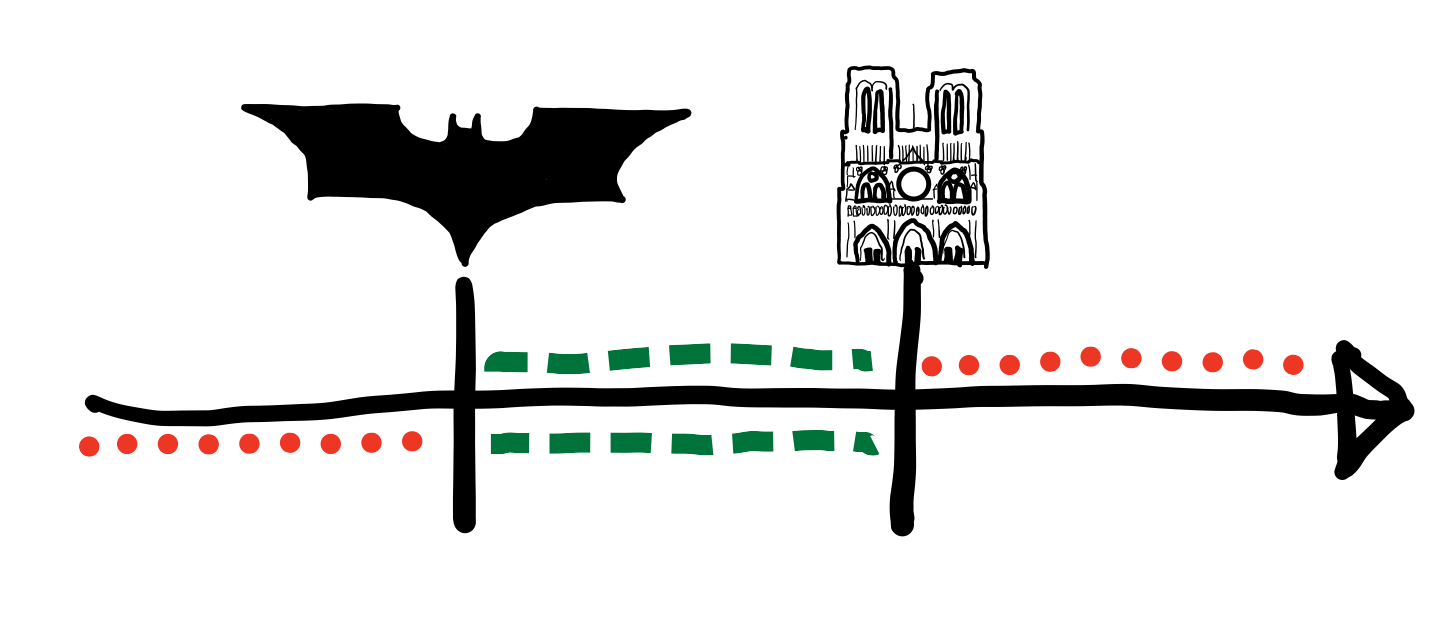

Relying on the deaths of heroes and the destruction of cathedrals, while exciting, isn’t the most productive way to date arbitrary digital media. Thankfully, the same logic used to create intuitive trust in our specific example can be applied to derive cryptographic trust in the general case. What we need is:

- Newspaper: Public information that can’t be guessed ahead of time

- Notre-Dame: A public, append-only database

The technology that most conveniently satisfies these roles is our old friend, the blockchain 15, which, for all intents and purposes, can be thought of as a public, append-only database 16 of information that cannot be guessed ahead of time 17. More specifically, our metaphorical newspaper becomes the most recent block, and our Notre-Dame becomes a block that we upload ourselves.
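Much of the “append-only” property comes from hash-linking: each entry commits to the hash of the one before it, so rewriting any past entry changes every hash after it. The sketch below (hypothetical helper names, SHA-256 assumed) shows only that tamper-evidence; it leaves out consensus and public replication, which are what make a real blockchain impractical to rewrite in the first place.

```python
import hashlib


def link(prev_hash: str, data: str) -> str:
    # prev_hash is always 64 hex characters, so the concatenation is unambiguous
    return hashlib.sha256((prev_hash + data).encode()).hexdigest()


def build_chain(entries: list) -> list:
    """Return the list of hashes, starting from an all-zero genesis hash."""
    hashes = ["0" * 64]
    for entry in entries:
        hashes.append(link(hashes[-1], entry))
    return hashes


def verify_chain(entries: list, hashes: list) -> bool:
    """Recompute every link; any edited entry breaks all hashes from that point on."""
    return build_chain(entries) == hashes
```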

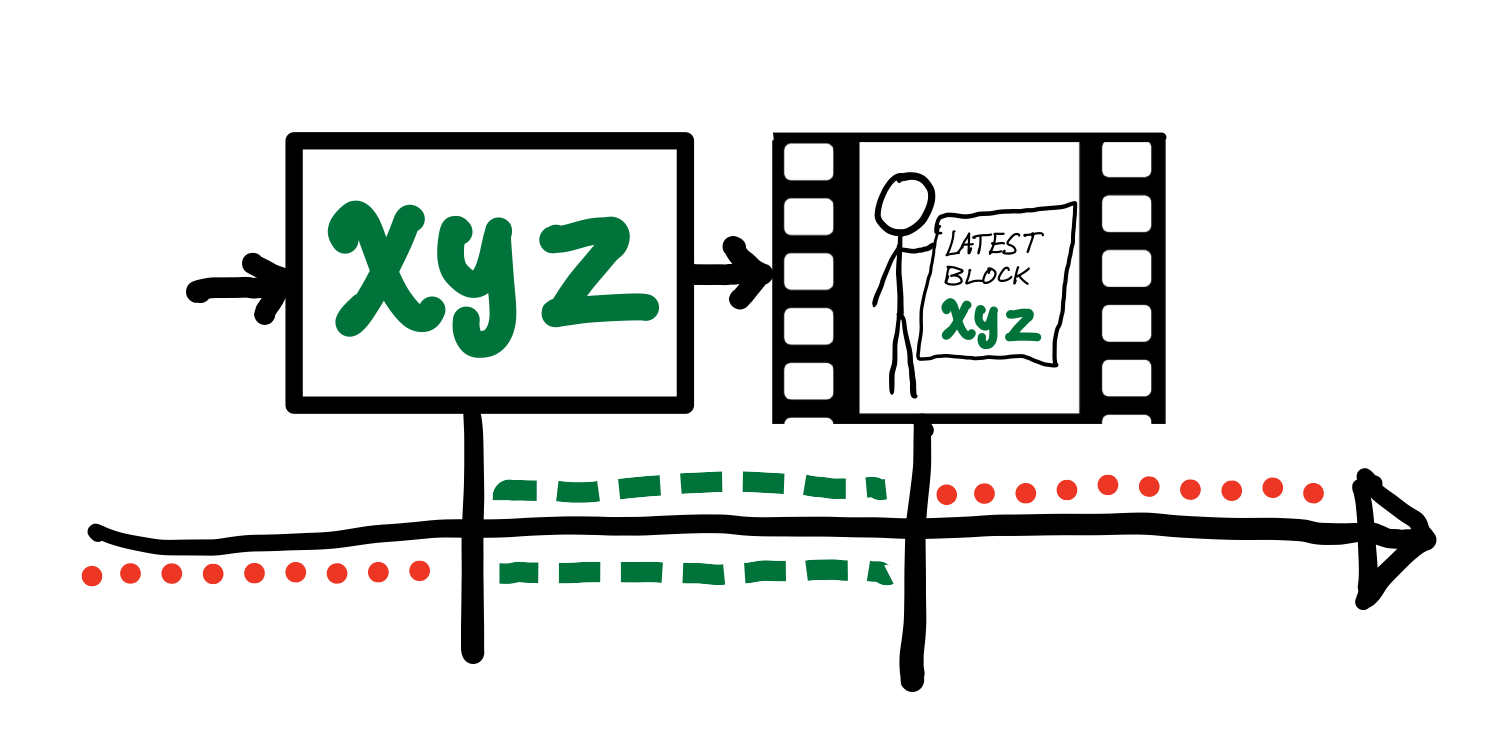

A back-of-the-napkin sketch of these ideas might look something like the following:

| Creating Time-Verifiable Media |
|---|
| Input: Unfinished non-synthetic media m_u, Blockchain c, Device d |
| Output: Time-verifiable non-synthetic media m |
| b_c ← Latest block of c |
| h_bc ← Hash(b_c) |
| Embed h_bc in m_u by filming it or speaking it out loud |
| Complete m_u |
| m ← Sign(m_u, d) |
| h_m ← Hash(m) |
| Upload h_m to c |
| Return(m) |
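A minimal Python sketch of the creation procedure, assuming a toy in-memory chain. `Block`, `create_time_verifiable`, and the byte-level “embedding” of h_bc are hypothetical stand-ins: a real device would film or speak the hash, and would sign with a TPM-held private key rather than the HMAC used here (HMAC relies on a shared secret, so it is not a public-key signature).

```python
import hashlib
import hmac
from dataclasses import dataclass, field


@dataclass
class Block:
    """Hypothetical minimal block: a timestamp, a link to its parent, and uploaded hashes."""
    timestamp: int
    prev_hash: str
    uploads: list = field(default_factory=list)

    def hash(self) -> str:
        body = f"{self.timestamp}|{self.prev_hash}|{','.join(self.uploads)}"
        return hashlib.sha256(body.encode()).hexdigest()


def create_time_verifiable(frames: list, chain: list, device_key: bytes, now: int) -> bytes:
    # b_c <- latest block of c; h_bc <- Hash(b_c)
    h_bc = chain[-1].hash()
    # "Embed" h_bc: a stand-in for filming or speaking it at the start of the recording
    m_u = h_bc.encode()
    # Complete m_u with the remaining recorded frames
    m_u += b"".join(frames)
    # m <- Sign(m_u, d); HMAC is only a stand-in for a TPM-backed public-key signature
    m = m_u + hmac.new(device_key, m_u, hashlib.sha256).digest()
    # h_m <- Hash(m), then upload h_m to c (modeled as appending a new block)
    h_m = hashlib.sha256(m).hexdigest()
    chain.append(Block(now, chain[-1].hash(), [h_m]))
    return m
```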

| Dating Time-Verifiable Media |
|---|
| Input: Time-verifiable media m, Blockchain c |
| Output: Range of time during which m was created |
| h_bc ← Hash of b_c embedded in m |
| b_c ← FindBlock(h_bc, c) |
| h_m ← Hash(m) |
| b_m ← b_c |
| While b_m does not contain h_m: b_m ← NextBlock(b_m, c), failing if c has no next block (m is not a sterile original) |
| Return(Timestamp(b_c), Timestamp(b_m)) |
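The dating procedure can be sketched the same way. The toy `Block` is defined here so the example stands alone, and extracting h_bc from the footage (reading it off a frame or transcribing it from audio) is assumed to have already happened. The function walks forward from b_c and returns None when the chain runs out without an upload of m.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Block:
    """Hypothetical minimal block: a timestamp, a link to its parent, and uploaded hashes."""
    timestamp: int
    prev_hash: str
    uploads: list = field(default_factory=list)

    def hash(self) -> str:
        body = f"{self.timestamp}|{self.prev_hash}|{','.join(self.uploads)}"
        return hashlib.sha256(body.encode()).hexdigest()


def date_media(m: bytes, embedded_h_bc: str, chain: list):
    """Return (earliest, latest) creation timestamps for m, or None if m can't be dated."""
    # b_c <- FindBlock(h_bc, c): the block whose hash was filmed into m
    start = next((i for i, b in enumerate(chain) if b.hash() == embedded_h_bc), None)
    if start is None:
        return None
    h_m = hashlib.sha256(m).hexdigest()
    # b_m starts at b_c and walks forward until a block containing h_m is found
    for b_m in chain[start:]:
        if h_m in b_m.uploads:
            return chain[start].timestamp, b_m.timestamp
    return None  # end of c reached: this is not a sterile copy of an uploaded original
```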

Conveniently, blockchain-based temporal verification gives us sterility detection (i.e., “have any bits been flipped since the original was uploaded?”) for free: if the hash of our questionable time-verifiable media doesn’t show up in a previously uploaded block, then we don’t have a sterile copy of the original. If a matching hash is found, an accompanying digital signature can be used to verify the device that originated the media.
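The sterility check reduces to a hash lookup. This sketch (SHA-256 assumed; the recording is placeholder bytes, and `is_sterile_copy` is a hypothetical name) flips a single bit of a recording and shows that the altered copy no longer matches the uploaded hash.

```python
import hashlib


def is_sterile_copy(candidate: bytes, uploaded_hashes: set) -> bool:
    """True only if the candidate hashes to a value that was previously uploaded."""
    return hashlib.sha256(candidate).hexdigest() in uploaded_hashes


original = bytes(range(256))  # placeholder for raw sensor data
uploaded = {hashlib.sha256(original).hexdigest()}

tampered = bytearray(original)
tampered[100] ^= 0b0000_0001  # flip a single bit
```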

Where: Location Verification

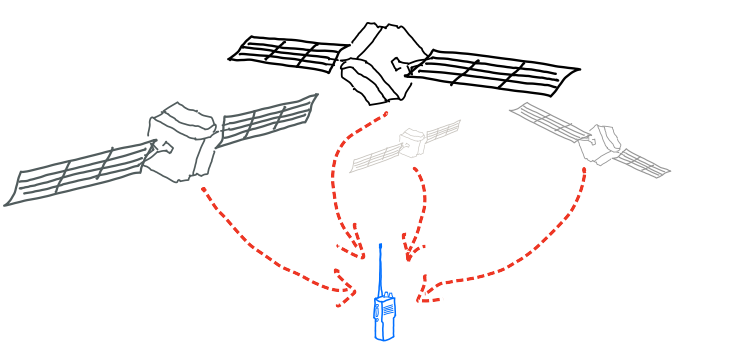

The Global Positioning System (GPS) and systems like it work by broadcasting one-way, spray-and-pray radio signals from a network of satellites. Specially calibrated devices receive these signals and, using all information at their disposal, deduce their own location 18. Unfortunately, this means that GPS yields only half of the trust we need; it allows us to trust our own location, but does nothing to convince others of it, especially in retrospect. The solution to this problem is not to amend existing satellite navigation systems. Aside from the usual pitfalls of introducing trust as an afterthought of design 19, a deeper problem lurks in the design itself: our signals are traveling in the wrong direction.

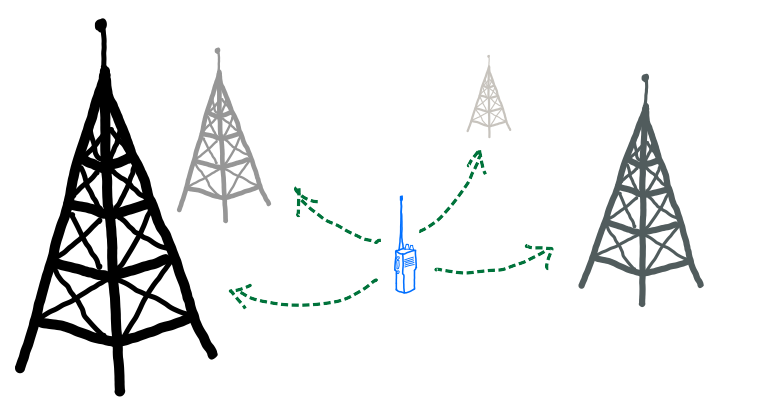

To rephrase: location-verifiable devices shouldn’t consume location information from trusted infrastructure; they should instead convince our trusted infrastructure of their location. Crowdsourced networks similar to Apple’s Find My might address this in an attractively distributed way, but crowdsourced networks depend on the participation of crowds 20. Another way of implementing location verification is with a technology fittingly known as reversed or inverted GPS 21 22, which places receivers on our trusted infrastructure (e.g. radio towers) and emitters on the devices we’d like to track. When needed, inverted GPS-compatible devices can emit cryptographically signed messages to be received, triangulated and verified by our trusted infrastructure.
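How the infrastructure turns received signals into a position is left open here. As one illustration, here is planar trilateration: given three receivers at known positions and the distance from each receiver to the emitter, subtracting the first circle equation from the other two leaves a 2×2 linear system, solved below with Cramer’s rule. The distances are assumed to be known already (e.g. derived from signal travel time); real systems also have to contend with clock offsets and measurement noise, and usually fit more receivers with least squares.

```python
def trilaterate(towers, distances):
    """Estimate (x, y) of an emitter from three receivers at known (x, y) positions.

    Each receiver i gives (x - xi)^2 + (y - yi)^2 = ri^2; subtracting the first
    equation from the other two cancels the quadratic terms.
    """
    (x1, y1), (x2, y2), (x3, y3) = towers
    r1, r2, r3 = distances
    # Coefficients of the resulting linear system A @ [x, y] = b
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("receivers are collinear; the position is ambiguous")
    # Cramer's rule
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y
```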

| Creating Location-Verifiable Media |
|---|
| Input: Origin-verifiable device d, Trusted infrastructure t |
| Output: Location-verifiable media |
| Begin recording location-verifiable non-synthetic media m |
| While m is unfinished: |
| Deduce d’s location l_gps 23 with GPS |
| Have d sign and broadcast b, a message containing l_gps |
| Receive b with t, deducing l_t 23 in the process |
| Return(m) |
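The device side of this loop might look like the following sketch. The beacon format (`device`, `tick`, `l_gps` as sorted-key JSON), the `locate` callback standing in for t’s own position estimate, and the HMAC tag standing in for a TPM-backed signature are all hypothetical. The media itself isn’t modeled, only the log that t keeps while the recording runs.

```python
import hashlib
import hmac
import json


def make_beacon(device_id: str, device_key: bytes, tick: int, l_gps: tuple) -> dict:
    """Build the signed broadcast b for one tick of recording (hypothetical format)."""
    payload = json.dumps({"device": device_id, "tick": tick, "l_gps": list(l_gps)}, sort_keys=True)
    # HMAC is only a stand-in for a public-key signature made with d's TPM-held key
    tag = hmac.new(device_key, payload.encode(), hashlib.sha256).hexdigest()
    return {"payload": payload, "sig": tag}


def record(device_id: str, device_key: bytes, gps_fixes: list, infrastructure_log: list, locate) -> None:
    """While m is unfinished: d signs and broadcasts l_gps, t logs it with its own l_t."""
    for tick, l_gps in enumerate(gps_fixes):
        b = make_beacon(device_id, device_key, tick, l_gps)
        l_t = locate(b)  # t's independent estimate, e.g. from inverted-GPS receivers
        infrastructure_log.append({"beacon": b, "l_t": l_t})
```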

| Verifying Location-Verifiable Media |
|---|
| Input: Origin-verifiable device d, Trusted infrastructure t, Location-verifiable media m |
| Output: Trusted location data |
| l_t, l_gps ← GetLocationData(t, d) |
| Verify(l_gps, d) |
| Compare(l_gps, l_t) 23 |
| Return(l_t) |
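Verification then checks the beacon’s tag and compares l_gps against l_t. This sketch assumes a hypothetical log-entry shape (a `beacon` holding a JSON `payload` and its `sig`, plus t’s own `l_t`), uses an HMAC check as a stand-in for Verify, and picks an arbitrary 50 m tolerance for Compare. Distances use the haversine great-circle formula with a mean Earth radius of 6,371 km.

```python
import hashlib
import hmac
import json
import math


def haversine_m(a, b) -> float:
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    r = 6_371_000.0  # mean Earth radius in metres
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * r * math.asin(math.sqrt(h))


def verify_location(entry: dict, device_key: bytes, tolerance_m: float = 50.0):
    """Return the trusted l_t if the beacon is authentic and agrees with l_gps, else None."""
    b = entry["beacon"]
    # Verify(l_gps, d): HMAC stand-in for checking d's public-key signature
    expected = hmac.new(device_key, b["payload"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, b["sig"]):
        return None
    # Compare(l_gps, l_t): the device's claim must agree with t's own measurement
    l_gps = tuple(json.loads(b["payload"])["l_gps"])
    if haversine_m(l_gps, entry["l_t"]) > tolerance_m:
        return None
    return entry["l_t"]
```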

Potential Drawbacks

No system is perfect, and this informally specified collection of ideas I’ve referred to as a system is no exception. Feel free to get in touch if you find additional vulnerabilities—especially showstopping ones.

Truth Can Be Misleading

| Problem | Possible Solutions |
|---|---|
| Intentionally short or out-of-context media can mislead by omitting important information. | This will always be a problem. |

Measurement Processing

| Problem | Possible Solutions |
|---|---|
| Many recording devices (e.g. cell phone cameras) perform heaps of computation, guesswork, and even AI-assisted modifications before yielding a final result. | Consumers of devices that produce verifiable media should neither expect nor trust anything that delivers more than simple measurements. “Raw” measurements may be a somewhat nebulous concept for digital devices (e.g. RAW photos are neither standardized nor unprocessed), but this is not a showstopper. |

Long Timeframes

| Problem | Possible Solutions |
|---|---|
| Date-verifiable media may present an unnecessarily long creation window, reducing its utility. | Genuine date-verifiable media has an incentive to present a creation window whose length is close to its runtime (e.g. n minutes for an n-minute video, and near zero for photos and other static media). Creation windows that unreasonably exceed this threshold could be doing so deceptively. |
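The threshold test can be written down directly. In this sketch, `window_is_suspicious` and its `slack_s` parameter are hypothetical. Because the window runs from the block filmed at the start of the recording to the block that records the upload, it will usually exceed the runtime by up to roughly one block interval at each end, so the slack needs to cover at least that much for the chosen chain.

```python
def window_is_suspicious(start_ts: float, end_ts: float, runtime_s: float, slack_s: float) -> bool:
    """Flag a creation window that exceeds the media's runtime by more than slack_s seconds.

    runtime_s is ~0 for photos and other static media.
    """
    if end_ts < start_ts:
        raise ValueError("creation window ends before it starts")
    return (end_ts - start_ts) - runtime_s > slack_s
```

For example, a one-minute clip whose window spans an hour would be flagged with a 20-minute slack, while a 15-minute window would not.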

Misleading Variants

| Problem | Possible Solutions |
|---|---|
| Variants of existing date-verifiable media can be created to mislead viewers into believing that the genuine original was created later than it actually was. | Knowledge of the genuine original eases this threat in the long term, especially when combined with location and origin verification. |

Time and Infrastructure

| Problem | Possible Solutions |
|---|---|
| A possible side effect of trusted inverted GPS infrastructure is blockchain-free time verification. This might be difficult to utilize in practice, however, as inverted GPS infrastructure will likely be centralized and sparse; in other words, difficult to trust for some and difficult to use for most. | Unfortunately, a large, secure and decentralized network of inverted GPS towers is an unrealistic expectation, if it is even possible. Temporal verification, at least, can always be decoupled from location verification. |

How to Trust Again

In 1984, Ken Thompson published Reflections on Trusting Trust 24:

The moral is obvious. You can’t trust code that you did not totally create yourself. (Especially code from companies that employ people like me.) No amount of source-level verification or scrutiny will protect you from using untrusted code.

Ken’s warning is relevant here in more ways than one, and it most certainly doesn’t allude to a future of trustworthy, verifiable media. After all, can you really trust anything you haven’t totally observed or deduced yourself?

Perhaps not, but it won’t stop people like me from trying anyways.

Acknowledgements

Thanks to Ayaz Hafiz 25, Amir Rahmati 26, Jake Scott, Zachary Espiritu, Andrey Chudnov, Shashank Kedia, and Jennifer’s kitchen table for their feedback and support.

https://www.newsweek.com/ai-voice-generator-tiktok-kanye-west-video-1593597

https://techcrunch.com/2020/09/14/sentinel-loads-up-with-1-35m-in-the-deepfake-detection-arms-race/

https://isr.umd.edu/news/story/deepfake-detection-invention-discerns-between-real-and-fake-media

https://edition.cnn.com/interactive/2019/01/business/pentagons-race-against-deepfakes/

https://www.theverge.com/2019/3/3/18244984/ai-generated-fake-which-face-is-real-test-stylegan

https://subscription.packtpub.com/book/security/9781838648176/4/ch04lvl1sec22/creating-sterile-media

https://azure.microsoft.com/en-us/blog/installing-certificates-into-iot-devices/

https://www.oreilly.com/library/view/mastering-blockchain/9781788839044/445f138c-e0de-430a-bf9c-3911ad4e7277.xhtml

This, of course, is a hand-wavy oversimplification: https://en.wikipedia.org/wiki/Global_Positioning_System

Consider, for example, a modified GPS system that has satellites digitally sign their transmissions. At first glance, this change might appear to prevent GPS spoofing and empower devices to prove their location at a certain time. It actually does neither.

Crowdsourced networks also depend on the security of whatever protocol underpins them: https://www.motortrend.com/news/researchers-show-bluetooth-signal-relay-can-open-start-tesla/

https://www.researchgate.net/publication/2840564_Experiments_of_Inverted_Pseudolite_Positioning

d might not trust t to be truthful about l_t; forcing t to bundle l_gps with l_t helps in this case.

https://www.cs.cmu.edu/~rdriley/487/papers/Thompson_1984_ReflectionsonTrustingTrust.pdf